Pre-recorded Sessions: From 4 December 2020 | Live Sessions: 10 – 13 December 2020

4 – 13 December 2020

#SIGGRAPHAsia | #SIGGRAPHAsia2020

Date/Time: 04 – 13 December 2020

All presentations are available in the virtual platform on-demand.

Description: We present an open-source, wearable sanitizer that provides just-in-time, automatic dispensing of alcohol to the wearer's hand or nearby objects using sensors and programmable cues. We systematically explore the design space for wearable sanitizers, aiming to create a device that integrates seamlessly with the user's body.

Description: MAScreen is a wearable LED display in the shape of a mask that senses lip motion and speech and provides real-time visual feedback on the vocal expression and emotion behind the mask. It can transform vocal data into text, emoji, and another language.

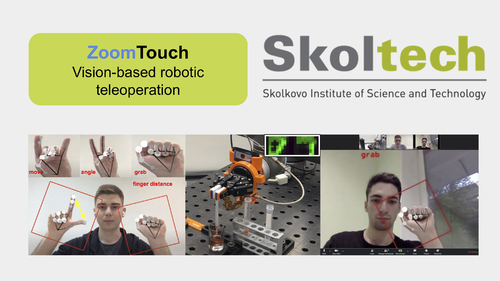

Description: ZoomTouch is a robot-human interaction system that allows an operator or a group of people to control a robotic arm through a video-call application from anywhere in the world using DNN-based hand tracking. No glove or special hand-tracking device is required; a camera is all you need.

Description: Dual Body was developed as a telexistence system in which the user does not need to continuously operate an avatar robot but can still passively perceive feedback sensations when the robot performs actions. This approach can greatly reduce perceived latency and feelings of fatigue.

Description: We present ElaStick, a variable-stiffness VR controller that creates the illusion of wielding objects of varying flexibility. In our demo session, participants can experience firsthand (1) the stiffness of swords of different lengths and shapes, and (2) changing flexibility due to interaction in VR.

Description: In this paper, we propose a balloon interface, a mid-air physical prop that affords direct single-handed manipulation in a safe manner. The system uses a spherical helium-filled balloon, controlled by ultrasound phased array transducers, as a physical prop that is safe to collide with at high speed.

Description: We propose “HaptoMapping,” a novel projection-based AR system that can present consistent visuo-haptic sensations on a non-planar physical surface without attaching any visual displays to users and while preserving the quality of visual information. We introduce three application scenarios in daily scenes.

Description: HexTouch is a forearm-mounted robot that performs complementary touches in relation to the behaviors of a companion agent in VR. The robot consists of a series of tactors driven by servo motors that render specific tactile patterns to communicate emotions (fear, happiness, disgust, anger, and sympathy) and other notification cues.

Description: We propose KABUTO, a haptic display add-on for HMDs designed to induce whole-body interaction through kinesthetic feedback. KABUTO can provide impact and resistance using flywheels and brakes in response to various head movements, as extensive head movements lead to dynamic movements throughout the whole body.

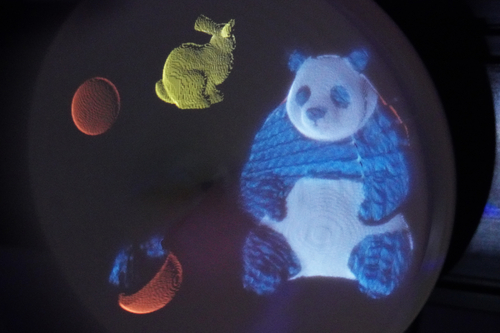

Description: In this study, a new swept volumetric 3D display is designed using physical materials as screens. We demonstrate that a realistic 3D object, such as a knitted wool or felt doll, can be perceived using a glasses-free solution in a wide-angle view with hidden-surface removal based on real-time viewpoint tracking.

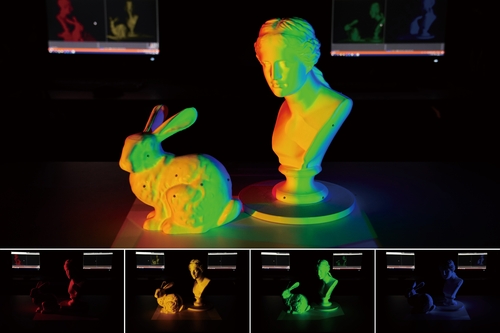

Description: We present a new dynamic projection mapping method based on pixel-parallel intensity control with multiple networked projectors. This method handles occlusion between objects well and merges the projections seamlessly onto augmented surfaces at high speed. The system achieves a throughput of 360 fps, at which projection delay is hardly noticeable visually.

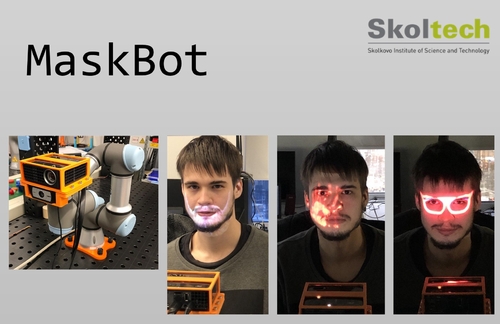

Description: We introduce MaskBot, a real-time projection mapping system operated by a 6DoF collaborative robot. The collaborative robot positions the projector and camera normal to the user's face, and a webcam is used to detect the face. MaskBot projects different images onto the user's face.

Description: This paper proposes a high-speed human arm projection mapping system with accurate skin deformation. The latency of the developed system was 10 ms, which is hardly noticeable visually. Compared to conventional methods, this system provides more realistic experiences that enable digitally applied tattoos and special effects makeup.

Description: We propose "CoVR," a co-located VR sharing system for HMD and non-HMD users that projects the HMD user's view with a projector mounted on the HMD. Not only is this system easy to install, but it also triggers joint attention and facilitates interaction by presenting different information to each user.

Description: CoiLED Display is a flexible and scalable display that transforms ordinary objects in our environment into displays simply by coiling the device around them. It consists of a strip-shaped display unit with a single row of attached LEDs and, after a calibration process, works as a display once it is wrapped onto a target object.

Description: We present a pipeline for laser graphics displays that operates with minimal latency and without input from a computer. The pipeline includes an interaction shader, a novel approach to creating interactive graphics that can react within 4 ms of an interaction.

Description: Our system can display both heating and cooling sensations on the hand via high-intensity airborne ultrasound.

Description: We present Bubble Mirror, a water pan with a camera that captures a visitor's face and displays it using electrolysis bubble clusters. The face image is displayed on the water surface by six grayscale electrolysis bubble clusters of 32 x 32 pixels.

Description: OmniPhotos are 360° VR photographs with motion parallax that can be casually captured in a single 360° video sweep. Capturing only takes 3 seconds and produces immersive high-quality 360° VR photographs that can be explored freely in virtual reality headsets.