Pre-recorded Sessions: From 4 December 2020 | Live Sessions: 10 – 13 December 2020

4 – 13 December 2020

#SIGGRAPHAsia | #SIGGRAPHAsia2020

Date: Thursday, December 10th

Time: 11:00am - 12:00pm

Venue: Zoom Room 9

Note: All live sessions will be held in Singapore Time (GMT+8).

Author(s)/Presenter(s):

Abstract:

We present an open-source wearable sanitizer that provides just-in-time, automatic dispensing of alcohol onto the wearer's hand or nearby objects using sensors and programmable cues. We systematically explore the design space for wearable sanitizers, aiming to create a device that seamlessly integrates with the user's body.

Author(s)/Presenter(s):

Abstract:

MAScreen is a wearable LED display in the shape of a mask that senses lip motion and speech and provides real-time visual feedback on the wearer's vocal expression and the emotion behind the mask. It can transform vocal data into text, emoji, or another language.

Author(s)/Presenter(s):

Abstract:

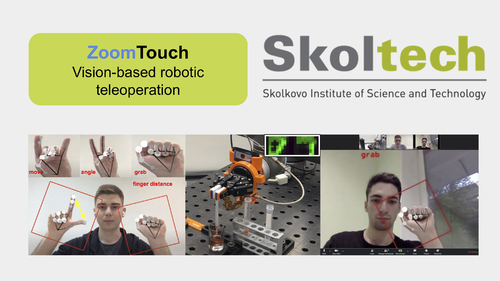

ZoomTouch is a robot-human interaction system that allows an operator or a group of people to control a robotic arm through a video-call application from anywhere in the world, using DNN-based hand tracking. No glove or special hand-tracking device is required: a camera is all you need.

Author(s)/Presenter(s):

Abstract:

Dual Body is a telexistence system in which the user does not need to continuously operate an avatar robot but can still passively perceive feedback sensations when the robot performs actions. This approach can greatly reduce perceived latency and user fatigue.