Pre-recorded Sessions: From 4 December 2020 | Live Sessions: 10 – 13 December 2020

4 – 13 December 2020

#SIGGRAPHAsia | #SIGGRAPHAsia2020

Date: Saturday, December 12th

Time: 11:00am - 12:00pm

Venue: Zoom Room 8

Note: All live sessions will be screened in Singapore Time (GMT+8).

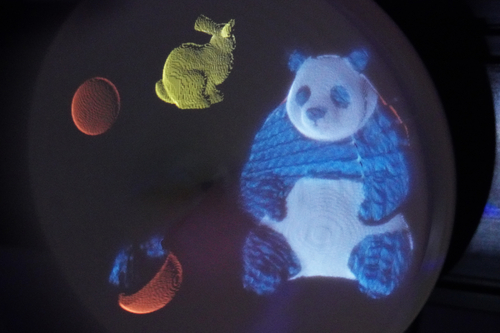

Abstract: In this study, a new swept volumetric 3D display is designed using physical materials as screens. We demonstrate that a realistic 3D object, such as a knitted wool or felt doll, can be perceived using a glasses-free solution in a wide-angle view with hidden-surface removal based on real-time viewpoint tracking.

Author(s)/Presenter(s):

Ray Asahina, Tokyo Institute of Technology, Japan

Takashi Nomoto, Tokyo Institute of Technology, Japan

Takatoshi Yoshida, University of Tokyo, Japan

Yoshihiro Watanabe, Tokyo Institute of Technology, Japan

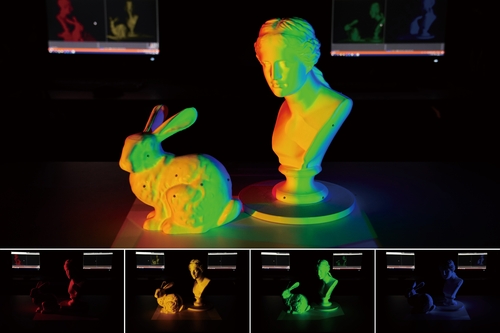

Abstract: We present a new dynamic projection mapping method based on pixel-parallel intensity control with multiple networked projectors. This method handles occlusion between objects well and merges the projections seamlessly onto augmented surfaces at high speed. The system achieves a throughput of 360 fps, so its updates are hardly noticeable visually.

Author(s)/Presenter(s):

Takashi Nomoto, Tokyo Institute of Technology, Japan

Wanlong Li, Tokyo Institute of Technology, Japan

Hao-Lun Peng, Tokyo Institute of Technology, Japan

Yoshihiro Watanabe, Tokyo Institute of Technology, Japan

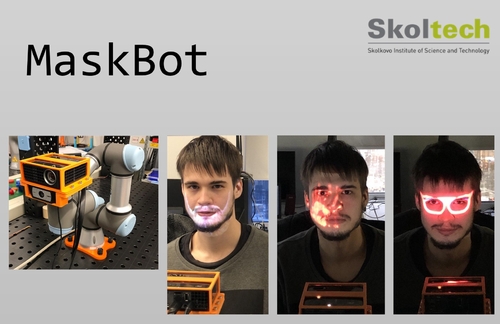

Abstract: We introduce MaskBot, a real-time projection mapping system operated by a 6DoF collaborative robot. The robot positions the projector and camera normal to the user's face, which is detected with a webcam. MaskBot then projects different images onto the user's face.

Author(s)/Presenter(s):

Miguel Altamirano Cabrera, Skoltech, Russia

Igor Usachev, Skoltech, Russia

Juan Heredia, Skoltech, Russia

Jonathan Tirado, Skoltech, Russia

Aleksey Fedoseev, Skoltech, Russia

Dzmitry Tsetserukou, Skoltech, Russia

Abstract: This paper proposes a high-speed projection mapping system for the human arm with accurate skin deformation. The latency of the developed system is 10 ms, which is hardly noticeable visually. Compared to conventional methods, this system provides more realistic experiences, enabling digitally applied tattoos and special effects makeup.

Author(s)/Presenter(s):

Hao-Lun Peng, Tokyo Institute of Technology, Japan

Yoshihiro Watanabe, Tokyo Institute of Technology, Japan

Abstract: We propose "CoVR," a co-located VR sharing system for HMD and non-HMD users that projects the HMD user's view with a projector mounted on the HMD. This system is not only easy to install but also triggers joint attention and facilitates interaction by presenting different information to each user.

Author(s)/Presenter(s):

Ikuo Kamei, University of Tokyo, Japan

Changyo Han, University of Tokyo, Japan

Takefumi Hiraki, Osaka University, Japan

Shogo Fukushima, University of Tokyo, Japan

Takeshi Naemura, University of Tokyo, Japan