Pre-recorded Sessions: From 4 December 2020 | Live Sessions: 10 – 13 December 2020

4 – 13 December 2020

#SIGGRAPHAsia | #SIGGRAPHAsia2020

Date: Thursday, December 10th

Time: 10:30am - 11:00am

Venue: Zoom Room 7

Note: All live sessions are scheduled in Singapore Time (GMT+8).
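For attendees outside Singapore, the conversion can be sketched in a few lines of Python. This is a minimal illustration, not part of the conference tooling: the helper name `session_in_offset` is hypothetical, and it assumes the target zone can be expressed as a fixed UTC offset (it does not handle daylight saving rules).

```python
from datetime import datetime, timedelta, timezone

# Singapore Time is a fixed UTC+8 offset with no daylight saving.
SGT = timezone(timedelta(hours=8), name="SGT")

def session_in_offset(hour, minute, utc_offset_hours, day=10):
    """Convert a December 2020 session time from SGT to a fixed UTC offset.

    Hypothetical helper for illustration only.
    """
    start_sgt = datetime(2020, 12, day, hour, minute, tzinfo=SGT)
    target = timezone(timedelta(hours=utc_offset_hours))
    return start_sgt.astimezone(target)

# The 10:30am session on Thursday, December 10th, expressed in UTC.
start_utc = session_in_offset(10, 30, 0)
print(start_utc.isoformat())  # 2020-12-10T02:30:00+00:00
```

A viewer at UTC-5 would call `session_in_offset(10, 30, -5)` and get 9:30pm on December 9th, so the session falls on the previous calendar day there.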

Abstract: Sketching is a foundational step in the design process. Decades of sketch processing research have produced algorithms for 3D shape interpretation, beautification, animation generation, colorization, etc. However, there is a mismatch between sketches created in the wild and the clean, sketch-like input required by these algorithms, preventing their adoption in practice. The recent flurry of sketch vectorization, simplification, and cleanup algorithms could be used to bridge this gap. However, they differ wildly in the assumptions they make on the input and output sketches. We present the first benchmark to evaluate and focus sketch cleanup research. Our dataset consists of 281 sketches obtained in the wild and a curated subset of 101 sketches. For this curated subset along with 40 sketches from previous work, we commissioned manual vectorizations and multiple ground truth cleaned versions by professional artists. The sketches span artistic and technical categories and were created by a variety of artists with different styles. Most sketches have Creative Commons licenses; the rest permit academic use. Our benchmark's metrics measure the similarity of automatically cleaned rough sketches to artist-created ground truth; the consistency of automatic cleanup algorithms' output when faced with different variations of the input; the ambiguity and messiness of rough sketches; and low-level properties of the output parameterized curves. Our evaluation identifies shortcomings among state-of-the-art cleanup algorithms and discusses open problems for future research.

Author(s)/Presenter(s):

Chuan Yan, George Mason University, United States of America

David Vanderhaeghe, Institut de Recherche en Informatique de Toulouse (IRIT), Université de Toulouse, France

Yotam Gingold, George Mason University, United States of America
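The abstract above mentions metrics that measure how similar an automatically cleaned sketch is to an artist-created ground truth, without naming them. As one plausible illustration only, the sketch below computes a symmetric Chamfer distance between two sets of 2D points sampled along the curves. The choice of Chamfer distance, the function names, and the sample points are all assumptions made for this example, not the benchmark's actual definition.

```python
import math

def _mean_nearest(src, dst):
    """Average distance from each point in src to its nearest point in dst."""
    return sum(min(math.dist(p, q) for q in dst) for p in src) / len(src)

def chamfer_distance(a, b):
    """Symmetric Chamfer distance between two non-empty 2D point sets."""
    return 0.5 * (_mean_nearest(a, b) + _mean_nearest(b, a))

# Toy data (made up): points sampled along an automatically cleaned stroke
# and along the corresponding ground-truth stroke.
cleaned = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
ground_truth = [(0.0, 0.1), (1.0, 0.1), (2.0, 0.1)]

score = chamfer_distance(cleaned, ground_truth)
print(round(score, 6))  # 0.1
```

Lower values mean the two point sets lie closer together; identical sets score exactly zero. The brute-force nearest-neighbor search is quadratic in the number of points, which is fine for a toy example but would call for a spatial index on densely sampled sketches.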

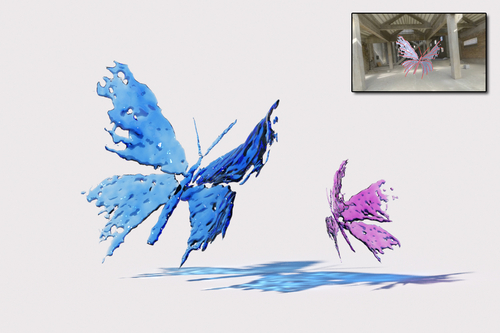

Abstract: Splashing is one of the most fascinating liquid phenomena in the real world and it is favored by artists to create stunning visual effects, both statically and dynamically. Unfortunately, the generation of complex and specialized liquid splashes is a challenging task and often requires considerable time and effort. In this paper, we present a novel system that synthesizes realistic liquid splashes from simple user sketch input. Our system adopts a conditional generative adversarial network (cGAN) trained with physics-based simulation data to produce raw liquid splash models from input sketches, and then applies model refinement processes to further improve their small-scale details. The system considers not only the trajectory of every user stroke, but also its speed, which makes the splash model simulation-ready with its underlying 3D flow. Compared with simulation-based modeling techniques that proceed by trial and error, our system offers flexibility, convenience and intuition in liquid splash design and editing. We evaluate the usability and the efficiency of our system in an immersive virtual reality environment. Thanks to this system, an amateur user can now generate a variety of realistic liquid splashes in just a few minutes.

Author(s)/Presenter(s):

Guowei Yan, Ohio State University, United States of America

Zhili Chen, ByteDance, United States of America

Jimei Yang, Adobe Research, United States of America

Huamin Wang, Ohio State University, United States of America

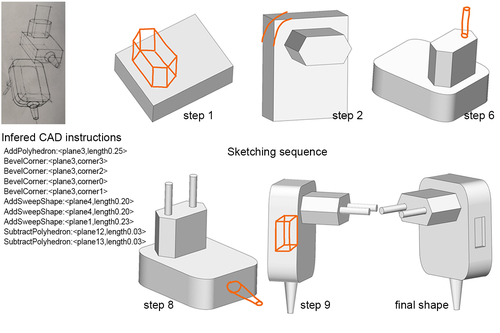

Abstract: We present a sketch-based CAD modeling system, where users create objects incrementally by sketching the desired shape edits, which our system automatically translates to CAD operations. Our approach is motivated by the close similarities between the steps industrial designers follow to draw 3D shapes, and the operations CAD modeling systems offer to create similar shapes. To overcome the strong ambiguity in parsing 2D sketches, we observe that in a sketching sequence, each step makes sense and can be interpreted in the context of what has been drawn before. In our system, this context corresponds to a partial CAD model, inferred in the previous steps, which we feed along with the input sketch to a deep neural network in charge of interpreting how the model should be modified by that sketch. Our deep network architecture then recognizes the intended CAD operation and segments the sketch accordingly, such that a subsequent optimization estimates the parameters of the operation that best fit the segmented sketch strokes. Since no datasets of paired sketching and CAD modeling sequences exist, we train our system by generating synthetic sequences of CAD operations that we render as line drawings. We present a proof-of-concept realization of our algorithm supporting four frequently used CAD operations. Using our system, participants are able to quickly model a large and diverse set of objects, demonstrating Sketch2CAD to be an alternate way of interacting with current CAD modeling systems.

Author(s)/Presenter(s):

Changjian Li, University College London (UCL), United Kingdom

Hao Pan, Microsoft Research Asia, China

Adrien Bousseau, Institut national de recherche en informatique et en automatique (INRIA) Université Nice Cote d'Azur, France

Niloy J. Mitra, University College London, Adobe Research, United Kingdom

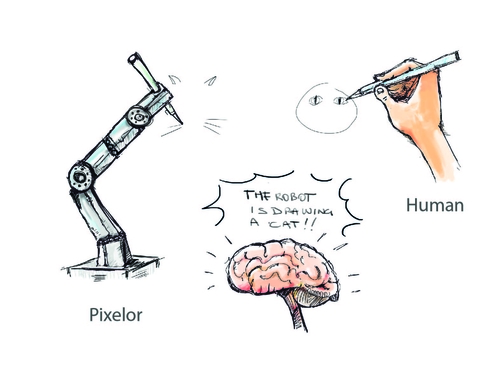

Abstract: We present the first competitive drawing agent Pixelor that exhibits human-level performance at a Pictionary-like sketching game, where the participant whose sketch is recognized first is the winner. Our AI agent can autonomously sketch a given visual concept, and achieve a recognizable rendition as quickly as, or faster than, a human competitor. The key to victory for the agent is to learn the optimal stroke sequencing strategies that generate the most recognizable and distinguishable strokes first. Training Pixelor is done in two steps. First, we infer the optimal stroke order that maximizes early recognizability of human training sketches. Second, this order is used to supervise the training of a sequence-to-sequence stroke generator. Our key technical contributions are a tractable search of the exponential space of orderings using neural sorting; and an improved Seq2Seq Wasserstein (S2S-WAE) generator that uses an optimal-transport loss to accommodate the multi-modal nature of the optimal stroke distribution. Our analysis shows that Pixelor is better than the human players of the Quick, Draw! game, under both AI and human judging of early recognition. To analyze the impact of human competitors’ strategies, we conducted a further human study with participants being given unlimited thinking time and training in early recognizability by feedback from an AI judge. The study shows that humans do gradually improve their strategies with training, but overall Pixelor still matches human performance. We will release the code and the dataset, optimized for the task of early recognition, upon acceptance.

Author(s)/Presenter(s):

Tao Xiang, University of Surrey, United Kingdom

Yulia Gryaditskaya, CVSSP, University of Surrey, United Kingdom

Yi-Zhe Song, University of Surrey, United Kingdom

Ayan Kumar Bhunia, University of Surrey, United Kingdom

Ayan Das, University of Surrey, United Kingdom

Umar Riaz Muhammad, CVSSP, University of Surrey, United Kingdom

Yongxin Yang, University of Surrey, United Kingdom

Timothy Hospedales, University of Edinburgh, United Kingdom
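The first training step described in the abstract above, finding a stroke order that maximizes early recognizability, can be illustrated with a deliberately simplified greedy search. This is not the neural sorting approach the authors describe; it is a toy stand-in that only shows the objective. The function `greedy_stroke_order`, the `recognizability` callable, and the stroke weights are all hypothetical.

```python
def greedy_stroke_order(strokes, recognizability):
    """Order strokes so that each prefix scores as high as a greedy choice allows.

    `recognizability` is a hypothetical callable that scores a partial sketch
    (a list of strokes); in practice it might be a classifier's confidence.
    """
    remaining = list(strokes)
    ordered = []
    while remaining:
        # Pick the stroke whose addition yields the highest-scoring partial sketch.
        best = max(remaining, key=lambda s: recognizability(ordered + [s]))
        ordered.append(best)
        remaining.remove(best)
    return ordered

# Toy stand-in for a classifier: each stroke adds a fixed, made-up amount
# of evidence for the target concept.
toy_weights = {"whiskers": 0.6, "ears": 0.3, "outline": 0.1}

def toy_score(partial):
    return sum(toy_weights[s] for s in partial)

order = greedy_stroke_order(["outline", "ears", "whiskers"], toy_score)
print(order)  # ['whiskers', 'ears', 'outline']
```

A greedy search evaluates only a quadratic number of partial sketches rather than every permutation, but it can miss orderings where two strokes are informative only in combination. That limitation is one reason a search over the full space of orderings, as the abstract describes, is the harder and more interesting problem.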

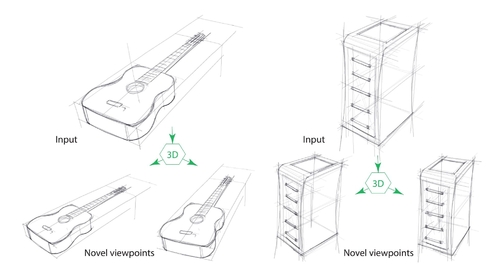

Abstract: We present the first algorithm capable of automatically lifting real-world, vector-format, industrial design sketches into 3D, by detecting 3D intersections and estimating 3D depth of artist strokes. Targeting real-world sketches raises numerous challenges due to inaccuracies, use of overdrawn strokes, and construction lines. In particular, while construction lines convey important 3D information, they add significant clutter and introduce multiple accidental 2D intersections. Our algorithm exploits the geometric cues provided by the construction lines and lifts them to 3D by computing their intended 3D intersections and depths. Once lifted to 3D, these lines provide valuable geometric constraints that we leverage to infer the 3D shape of other artist-drawn strokes. The core challenge we address is inferring the 3D connectivity of construction and other lines from their 2D projections by separating 2D intersections into 3D intersections and accidental occlusions. We efficiently address this complex combinatorial problem using a dedicated search algorithm that leverages observations about designer drawing preferences, and uses those to explore only the most likely solutions of the 3D intersection detection problem. We demonstrate that our separator outputs are of comparable quality to human annotation and that the 3D structures we recover enable a range of design editing and visualization applications, including novel view synthesis and 3D-aware editing of the depicted shape.

Author(s)/Presenter(s):

Yulia Gryaditskaya, CVSSP, University of Surrey; Institut national de recherche en informatique et en automatique (INRIA) Université Nice Cote d'Azur, United Kingdom

Felix Hahnlein, Institut national de recherche en informatique et en automatique (INRIA) Université Nice Cote d'Azur, France

Chenxi Liu, University of British Columbia, Canada

Alla Sheffer, University of British Columbia, Canada

Adrien Bousseau, Institut national de recherche en informatique et en automatique (INRIA) Université Nice Cote d'Azur, France

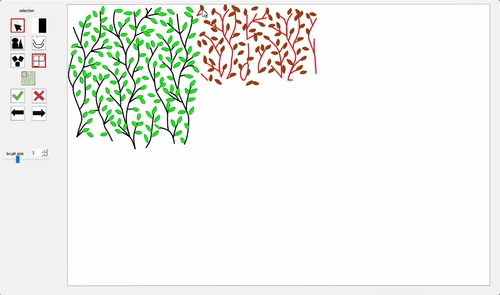

Abstract: Repetitive patterns are ubiquitous in natural and man-made objects, with a variety of development tools and methods. Manual authoring provides an unprecedented degree of freedom and control, but can require significant artistic expertise and manual labor. Computational methods can automate parts of the manual creation process, but are mainly tailored for discrete pixels or elements instead of more general continuous structures. We propose an example-based method to synthesize continuous curve patterns from exemplars. Our main idea is to extend prior sample-based discrete element synthesis methods to consider not only sample positions (geometry) but also their connections (topology). Since continuous structures can exhibit higher complexity than discrete elements, we also propose robust, hierarchical synthesis to enhance output quality. Our algorithm can generate a variety of continuous curve patterns fully automatically. For further quality improvement and customization, we also present an autocomplete user interface to facilitate interactive creation and iterative editing. We evaluate our methods and interface via different patterns, ablation studies, and comparisons with alternative methods.

Author(s)/Presenter(s):

Peihan Tu, University of Maryland College Park, United States of America

Li-Yi Wei, Adobe Research, United States of America

Koji Yatani, University of Tokyo, Japan

Takeo Igarashi, University of Tokyo, Japan

Matthias Zwicker, University of Maryland College Park, United States of America