Pre-recorded Sessions: From 4 December 2020 | Live Sessions: 10 – 13 December 2020

4 – 13 December 2020

#SIGGRAPHAsia | #SIGGRAPHAsia2020

Date: Sunday, December 13th

Time: 12:30pm - 1:00pm

Venue: Zoom Room 3

Note: All live sessions will be screened on Singapore Time/GMT+8.

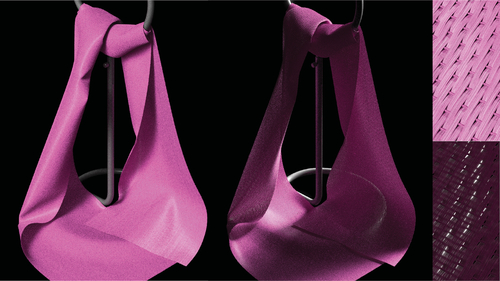

Abstract: Simulating the appearance of woven fabrics is challenging due to the complex interplay of lighting between the constituent yarns and fibers. Conventional surface-based models lack the fidelity and details for producing realistic close-up renderings. Micro-appearance models, on the other hand, can produce highly detailed renderings by depicting fabrics fiber-by-fiber, but become expensive when handling large pieces of clothing. Further, neither surface-based nor micro-appearance models have been shown in practice to match measurements of complex anisotropic reflection and transmission simultaneously. In this paper, we introduce a practical appearance model for woven fabrics. We model the structure of a fabric at the ply level and simulate the local appearance of fibers making up each ply. Our model accounts for both reflection and transmission of light and is capable of matching physical measurements better than prior methods, including fiber-based techniques. Compared to existing micro-appearance models, our model is light-weight and scales to large pieces of clothing.

Author(s)/Presenter(s):

Zahra Montazeri, University of California Irvine, Luxion Inc, United States of America

Soren B. Gammelmark, Luxion Inc, Denmark

Shuang Zhao, University of California Irvine, United States of America

Henrik W. Jensen, Luxion Inc, University of California San Diego, United States of America

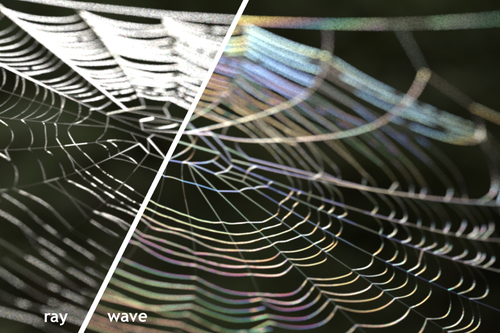

Abstract: Existing fiber scattering models in rendering are all based on tracing rays through fiber geometry, but for small fibers diffraction and interference are non-negligible, so relying on ray optics can result in appearance errors. This paper presents the first wave optics based fiber scattering model, introducing an azimuthal scattering function that comes from a full wave simulation. Solving Maxwell's equations for a straight fiber of constant cross section illuminated by a plane wave reduces to solving for a 3D electromagnetic field in a 2D domain, and our fiber scattering simulator solves this 2.5D problem efficiently using the boundary element method (BEM). From the resulting fields we compute extinction, absorption, and far-field scattering distributions, which we use to simulate shadowing and scattering by fibers in a path tracer. We validate our path tracer against the wave simulation and the simulation against a measurement of diffraction from a single textile fiber. Our results show that our approach can reproduce a wide range of fibers with different sizes, cross-sections, and material properties, including textile fibers, animal fur, and human hair. The renderings include color effects, softening of sharp features, and strong forward scattering that are not predicted by traditional ray based models, though the two approaches produce similar appearance for complex fiber assemblies under some conditions.

Author(s)/Presenter(s):

Mengqi Xia, Cornell University, United States of America

Bruce Walter, Cornell University, United States of America

Eric Michielssen, University of Michigan, United States of America

David Bindel, Cornell University, United States of America

Steve Marschner, Cornell University, United States of America

Abstract: The unique and visually mesmerizing appearance of pearlescent materials has made them an indispensable ingredient in a diverse array of applications including packaging, ceramics, printing, and cosmetics. In contrast to their natural counterparts, such synthetic examples of pearlescence are created by dispersing microscopic interference pigments within a dielectric resin. The resulting space of materials comprises an enormous range of different phenomena ranging from smooth lustrous appearance reminiscent of pearl to highly directional metallic gloss, along with a gradual change in color that depends on the angle of observation and illumination. All of these properties arise due to a complex optical process involving multiple scattering from platelets characterized by wave-optical interference. This article introduces a flexible model for simulating the optics of such pearlescent 3D microstructures. Following a thorough review of the properties of currently used pigments and manufacturing-related effects that influence pearlescence, we propose a new model which expands the range of appearance that can be represented, and closely reproduces the behavior of measured materials, as we show in our comparisons. Using our model, we conduct a systematic study of the parameter space and its relationship to different aspects of pearlescent appearance. We observe that several previously ignored parameters have a substantial impact on the material's optical behavior, including the multi-layered nature of modern interference pigments, correlations in the orientation of pigment particles, and variability in their properties (e.g. thickness). The utility of a general model for pearlescence extends far beyond computer graphics: inverse and differentiable approaches to rendering are increasingly used to disentangle the physics of scattering from real-world observations. Our approach could inform such reconstructions to enable the predictive design of tailored pearlescent materials.

Author(s)/Presenter(s):

Ibón Guillén, Universidad de Zaragoza - I3A, Spain

Julio Marco, Universidad de Zaragoza - I3A, Spain

Diego Gutierrez, Universidad de Zaragoza - I3A, Spain

Wenzel Jakob, EPFL, Switzerland

Adrian Jarabo, Universidad de Zaragoza, Centro Universitario de la Defensa Zaragoza, Spain

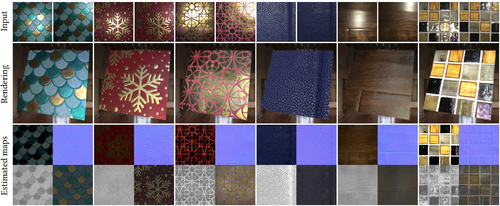

Abstract: We address the problem of reconstructing spatially-varying BRDFs from a small set of image measurements. This is a fundamentally under-constrained problem, and previous work has relied on using various regularization priors or on capturing many images to produce plausible results. In this work, we present MaterialGAN, a deep generative convolutional network based on StyleGAN2, trained to synthesize realistic SVBRDF parameter maps. We show that MaterialGAN can be used as a powerful material prior in an inverse rendering framework: we optimize in its latent representation to generate material maps that match the appearance of the captured images when rendered. We demonstrate this framework on the task of reconstructing SVBRDFs from images captured under flash illumination using a hand-held mobile phone. Our method succeeds in producing plausible material maps that accurately reproduce the target images, and outperforms previous state-of-the-art material capture methods in evaluations on both synthetic and real data. Furthermore, our GAN-based latent space allows for high-level semantic material editing operations such as generating material variations and material morphing.

Author(s)/Presenter(s):

Yu Guo, University of California Irvine, United States of America

Cameron Smith, Adobe Research, United States of America

Milos Hasan, Adobe Research, United States of America

Kalyan Sunkavalli, Adobe Research, United States of America

Shuang Zhao, University of California Irvine, United States of America

Abstract: We introduce a novel ink selection method for spectral printing. The ink selection algorithm takes a spectral image and a set of inks as input, and selects a subset of those inks that results in optimal spectral reproduction. We put forward an optimization formulation that searches a huge combinatorial space based on mixed integer programming. We show that solving this optimization in the conventional reflectance space is intractable. The main insight of this work is to solve our problem in the spectral absorbance space with a linearized formulation. The proposed ink selection copes with large-size problems for which previous methods are hopeless. We demonstrate the effectiveness of our method in a concrete setting by lifelike reproduction of handmade paintings. For a successful spectral reproduction of high-resolution paintings, we explore their spectral absorbance estimation, efficient coreset representation, and accurate data-driven reproduction.

Author(s)/Presenter(s):

Navid Ansari, Max-Planck-Institut für Informatik, Germany

Omid Alizadeh-Mousavi, Depsys SA, Switzerland

Hans-Peter Seidel, Max-Planck-Institut für Informatik, Germany

Vahid Babaei, Max-Planck-Institut für Informatik, Germany