Pre-recorded Sessions: From 4 December 2020 | Live Sessions: 10 – 13 December 2020

4 – 13 December 2020

#SIGGRAPHAsia | #SIGGRAPHAsia2020

Date: Friday, December 11th

Time: 1:00pm - 1:30pm

Venue: Zoom Room 6

Note: All live sessions are scheduled in Singapore Time (GMT+8).

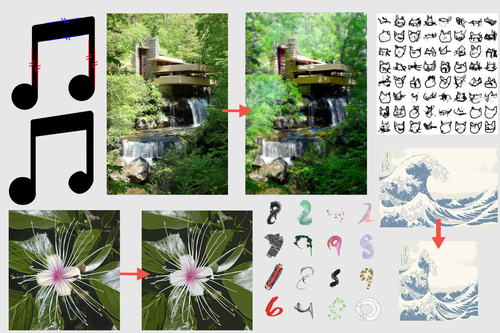

Abstract: We introduce a differentiable rasterizer that bridges the vector graphics and raster image domains, enabling powerful raster-based loss functions, optimization procedures, and machine learning techniques to edit and generate vector content. We observe that vector graphics rasterization is differentiable after pixel prefiltering. Our differentiable rasterizer offers two prefiltering options: an analytical prefiltering technique and a multisampling anti-aliasing technique. The analytical variant is faster but can suffer from artifacts such as conflation. The multisampling variant is still efficient and can render high-quality images while computing unbiased gradients for each pixel with respect to curve parameters. We demonstrate that our rasterizer enables new applications, including a vector graphics editor guided by image metrics, a painterly rendering algorithm that fits vector primitives to an image by minimizing a deep perceptual loss function, new vector graphics editing algorithms that exploit well-known image processing methods such as seam carving, and deep generative models that generate vector content from raster-only supervision under a VAE or GAN training objective.

Author(s)/Presenter(s):

Tzu-Mao Li, MIT CSAIL, United States of America

Mike Lukac, Adobe Research, United States of America

Michaël Gharbi, Adobe Research, United States of America

Jonathan Ragan-Kelley, MIT CSAIL, United States of America
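The first abstract's key observation, that vector rasterization becomes differentiable once pixels are prefiltered, can be illustrated in one dimension. The sketch below is an illustrative toy, not the authors' rasterizer: the unit pixel, the straight edge at position `c`, and all helper names are assumptions. Point sampling and naive supersampling produce piecewise-constant coverage whose derivative is zero almost everywhere, while analytic box-filtered coverage varies linearly with `c`.

```python
"""Why prefiltering makes rasterization differentiable: a 1D sketch.

A unit pixel spans [px, px + 1]; a shape covers the half-plane x < c,
so its edge sits at x = c. Helper names are hypothetical.
"""


def point_sample(c: float, px: float) -> float:
    """No prefiltering: test only the pixel center (a step function in c)."""
    return 1.0 if px + 0.5 < c else 0.0


def supersample(c: float, px: float, n: int = 4) -> float:
    """Average of n evenly spaced samples: still piecewise constant in c."""
    return sum(1.0 for i in range(n) if px + (i + 0.5) / n < c) / n


def box_coverage(c: float, px: float) -> float:
    """Analytic box prefilter: exact fraction of the pixel that is covered."""
    return min(max(c - px, 0.0), 1.0)


def box_coverage_grad(c: float, px: float) -> float:
    """d(coverage)/dc: 1 while the edge lies inside the pixel, else 0."""
    return 1.0 if 0.0 < c - px < 1.0 else 0.0


def central_diff(f, c: float, px: float, h: float = 1e-4) -> float:
    """Central finite-difference estimate of df/dc."""
    return (f(c + h, px) - f(c - h, px)) / (2.0 * h)


if __name__ == "__main__":
    c, px = 3.3, 3.0  # the edge crosses the pixel [3, 4]
    for name, f in [("point", point_sample),
                    ("supersample", supersample),
                    ("box", box_coverage)]:
        print(f"{name:12s} value={f(c, px):.3f} d/dc~{central_diff(f, c, px):.3f}")
    print("analytic box gradient:", box_coverage_grad(c, px))
```

At `c = 3.3` the point-sampled and supersampled estimates have a zero finite-difference gradient, while the box-filtered coverage (0.3) changes at rate 1. Naive supersampling alone therefore gives no useful gradient, so the multisampling variant described in the abstract must obtain its gradients by other means; that part is not sketched here.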

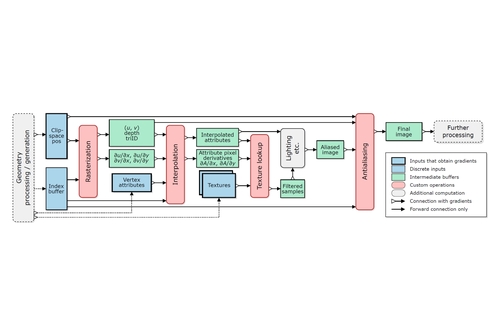

Abstract: We present a modular differentiable renderer design that yields performance superior to previous methods by leveraging existing, highly optimized hardware graphics pipelines. Our design supports all crucial operations in a modern graphics pipeline: rasterizing large numbers of triangles, attribute interpolation, filtered texture lookups, as well as user-programmable shading and geometry processing, all in high resolutions. Our modular primitives allow custom, high-performance graphics pipelines to be built directly within automatic differentiation frameworks such as PyTorch or TensorFlow. As a motivating application, we formulate facial performance capture as an inverse rendering problem and show that it can be solved efficiently using our tools. Our results indicate that this simple and straightforward approach achieves excellent geometric correspondence between rendered results and reference imagery.

Author(s)/Presenter(s):

Samuli Laine, NVIDIA, Finland

Janne Hellsten, NVIDIA, Finland

Tero Karras, NVIDIA, Finland

Yeongho Seol, NVIDIA, South Korea

Jaakko Lehtinen, NVIDIA, Aalto University, Finland

Timo Aila, NVIDIA, Finland
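Attribute interpolation, one of the operations listed in the second abstract, is linear in the per-vertex attributes, so its backward pass is short. The following pure-Python sketch is a hypothetical stand-in, not the authors' GPU primitives or their PyTorch/TensorFlow interface: it computes barycentric coordinates for one pixel, interpolates vertex colors, and propagates an L2 loss gradient back to those colors. Gradients with respect to vertex positions and visibility edges are out of scope.

```python
"""Barycentric attribute interpolation with a hand-written backward pass.

Forward:  out[k] = sum_i b[i] * attrs[i][k]
Backward: dL/dattrs[i][k] = b[i] * dL/dout[k]
"""
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def barycentrics(p: Point, v0: Point, v1: Point, v2: Point) -> Tuple[float, float, float]:
    """Barycentric coordinates of 2D point p in triangle (v0, v1, v2)."""
    (x, y), (x0, y0), (x1, y1), (x2, y2) = p, v0, v1, v2
    det = (y1 - y2) * (x0 - x2) + (x2 - x1) * (y0 - y2)
    b0 = ((y1 - y2) * (x - x2) + (x2 - x1) * (y - y2)) / det
    b1 = ((y2 - y0) * (x - x2) + (x0 - x2) * (y - y2)) / det
    return b0, b1, 1.0 - b0 - b1


def interpolate(b: Sequence[float], attrs: Sequence[Sequence[float]]) -> List[float]:
    """Forward pass: blend per-vertex attributes with barycentric weights."""
    return [sum(b[i] * attrs[i][k] for i in range(3)) for k in range(len(attrs[0]))]


def interpolate_backward(b: Sequence[float], grad_out: Sequence[float]) -> List[List[float]]:
    """Backward pass: gradient of the loss with respect to each vertex attribute."""
    return [[b[i] * g for g in grad_out] for i in range(3)]


if __name__ == "__main__":
    tri = ((0.0, 0.0), (1.0, 0.0), (0.0, 1.0))
    colors = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]  # RGB per vertex
    target = [0.2, 0.2, 0.6]  # hypothetical reference pixel

    b = barycentrics((0.25, 0.25), *tri)
    out = interpolate(b, colors)
    # Single-pixel L2 loss: L = sum_k (out[k] - target[k])^2
    grad_out = [2.0 * (o - t) for o, t in zip(out, target)]
    print("barycentrics:", b)
    print("pixel color:", out)
    print("dL/dcolors:", interpolate_backward(b, grad_out))
```

For the pixel at (0.25, 0.25) the barycentrics are (0.5, 0.25, 0.25), so each vertex color receives the pixel's color gradient scaled by its barycentric weight.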

Abstract: Capturing the 3D geometry of transparent objects is a challenging task, ill-suited to general-purpose scanning and reconstruction techniques, since these cannot handle specular light transport phenomena. Existing state-of-the-art methods, designed specifically for this task, either involve a complex setup to reconstruct complete refractive ray paths, or leverage a data-driven approach based on synthetic training data. In either case, the reconstructed 3D models suffer from over-smoothing and loss of fine detail. This paper introduces a novel, high-precision 3D acquisition and reconstruction method for solid transparent objects. Using a static background with a coded pattern, we establish a mapping between the camera view rays and locations on the background. Differentiable tracing of refractive ray paths is then used to directly optimize a 3D mesh approximation of the object, while simultaneously ensuring silhouette consistency and smoothness. Extensive experiments and comparisons demonstrate the superior accuracy of our method.

Author(s)/Presenter(s):

Jiahui Lyu, Shenzhen University, China

Bojian Wu, Alibaba Group, China

Dani Lischinski, The Hebrew University of Jerusalem, Israel

Daniel Cohen-Or, Shenzhen University, Tel Aviv University, Israel

Hui Huang, Shenzhen University, China
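The third abstract optimizes a mesh by differentiably tracing refracted rays and comparing where they land on a coded background. A heavily simplified sketch of that idea follows, under stated assumptions: a single planar surface whose tilt `theta` is the only unknown, a refractive index of 1.5 for the object (so `eta = 1/1.5` at the air-to-glass interface), a single refraction (the exit refraction through the back of the object is ignored), and a finite-difference derivative standing in for differentiable tracing. All function names are hypothetical.

```python
"""Refraction at a tilted surface and a one-parameter inverse problem."""
import math
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def refract(d: Vec3, n: Vec3, eta: float) -> Optional[Vec3]:
    """Snell's law in vector form.

    d: unit incident direction; n: unit normal facing the incident side
    (dot(d, n) < 0); eta = n_incident / n_transmitted.
    Returns None on total internal reflection.
    """
    cos_i = -dot(n, d)
    k = 1.0 - eta * eta * (1.0 - cos_i * cos_i)
    if k < 0.0:
        return None
    s = eta * cos_i - math.sqrt(k)
    return tuple(eta * d[j] + s * n[j] for j in range(3))


def surface_normal(theta: float) -> Vec3:
    """Unit normal of the glass surface, tilted by theta in the x-z plane."""
    return (math.sin(theta), 0.0, math.cos(theta))


def background_hit_x(theta: float, eta: float = 1.0 / 1.5, depth: float = 1.0) -> float:
    """x coordinate where the refracted camera ray meets the plane z = -depth."""
    d = (0.0, 0.0, -1.0)  # camera looks straight down -z
    t = refract(d, surface_normal(theta), eta)
    assert t is not None  # cannot happen when entering the denser medium
    return t[0] * (-depth / t[2])  # the ray starts at the origin on the surface


def fit_theta(x_obs: float, theta0: float = 0.0, iters: int = 20, h: float = 1e-6) -> float:
    """Gauss-Newton on the residual r(theta) = background_hit_x(theta) - x_obs."""
    theta = theta0
    for _ in range(iters):
        r = background_hit_x(theta) - x_obs
        dr = (background_hit_x(theta + h) - background_hit_x(theta - h)) / (2.0 * h)
        theta -= r / dr
    return theta


if __name__ == "__main__":
    true_theta = 0.2                      # hypothetical ground-truth tilt (radians)
    x_obs = background_hit_x(true_theta)  # stands in for a decoded background location
    print("observed x:", x_obs)
    print("recovered theta:", fit_theta(x_obs))
```

The `refract` function is the standard vector form of Snell's law; the rest only shows how a single observed background correspondence constrains surface orientation.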

Abstract: We present MATch, a method to automatically convert photographs of material samples into production-grade procedural material models. At the core of MATch is a new library, DiffMat, that provides differentiable building blocks for constructing procedural materials and automatic translation of large-scale procedural models, with hundreds to thousands of node parameters, into differentiable node graphs. Combining these translated node graphs with a rendering layer yields an end-to-end differentiable pipeline that maps node graph parameters to rendered images. This facilitates the use of gradient-based optimization to estimate the parameters such that the resulting material, when rendered, matches the target image appearance, as quantified by a style transfer loss. In addition, we propose a deep neural feature-based graph selection and parameter initialization method that efficiently scales to a large number of procedural graphs. We evaluate our method on both rendered synthetic materials and real materials captured as flash photographs. We demonstrate that MATch can reconstruct more accurate, general, and complex procedural materials than state-of-the-art methods. Moreover, by producing a procedural output, we unlock capabilities such as constructing arbitrary-resolution material maps and parametrically editing the material appearance.

Author(s)/Presenter(s):

Liang Shi, MIT CSAIL, United States of America

Beichen Li, MIT CSAIL, United States of America

Miloš Hašan, Adobe Inc., United States of America

Kalyan Sunkavalli, Adobe Inc., United States of America

Tamy Boubekeur, Adobe Inc., France

Radomir Mech, Adobe Inc., United States of America

Wojciech Matusik, MIT CSAIL, United States of America
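MATch, in the fourth abstract, turns procedural node graphs into differentiable functions and fits their parameters by gradient descent. The toy below illustrates that loop with a hypothetical two-node graph (a stripe generator feeding a blend node). The node names, the fixed stripe frequency, and the use of a pixel-wise MSE in place of the paper's style transfer loss are all assumptions; none of this reflects DiffMat's actual API.

```python
"""Fitting procedural-material parameters by gradient descent (toy sketch).

Hypothetical graph: a stripe mask m(x) feeds a blend node that mixes two
grayscale albedos, out(x) = (1 - m(x)) * c0 + m(x) * c1.
"""
import math
from typing import List, Tuple

N_PIXELS = 64
FREQ = 4  # stripe frequency, held fixed (not optimized) in this sketch


def stripe_mask() -> List[float]:
    """Generator node: sinusoidal stripes remapped to [0, 1]."""
    return [0.5 + 0.5 * math.sin(2.0 * math.pi * FREQ * i / N_PIXELS)
            for i in range(N_PIXELS)]


def blend(mask: List[float], c0: float, c1: float) -> List[float]:
    """Blend node: per-pixel linear mix of two albedos."""
    return [(1.0 - m) * c0 + m * c1 for m in mask]


def loss_and_grad(params: Tuple[float, float],
                  target: List[float]) -> Tuple[float, Tuple[float, float]]:
    """Pixel-wise MSE between rendered and target images, with its analytic gradient."""
    c0, c1 = params
    mask = stripe_mask()
    out = blend(mask, c0, c1)
    n = len(out)
    resid = [o - t for o, t in zip(out, target)]
    loss = sum(r * r for r in resid) / n
    # Chain rule through the blend node: dout/dc0 = 1 - m, dout/dc1 = m.
    g0 = sum(2.0 * r * (1.0 - m) for r, m in zip(resid, mask)) / n
    g1 = sum(2.0 * r * m for r, m in zip(resid, mask)) / n
    return loss, (g0, g1)


def fit(target: List[float], init: Tuple[float, float] = (0.5, 0.5),
        lr: float = 1.0, steps: int = 100) -> Tuple[float, float]:
    """Plain gradient descent on the two blend-node parameters."""
    c0, c1 = init
    for _ in range(steps):
        _, (g0, g1) = loss_and_grad((c0, c1), target)
        c0, c1 = c0 - lr * g0, c1 - lr * g1
    return c0, c1


if __name__ == "__main__":
    target = blend(stripe_mask(), 0.2, 0.8)  # hypothetical "photograph"
    print("recovered (c0, c1):", fit(target))
```

Because the blend node is linear in `c0` and `c1`, this loss is a convex quadratic and gradient descent recovers the values (0.2, 0.8) used to synthesize the target. Real graphs with hundreds of nonlinear nodes offer no such guarantee, which is where a good parameter initialization, such as the one the abstract describes, matters more.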

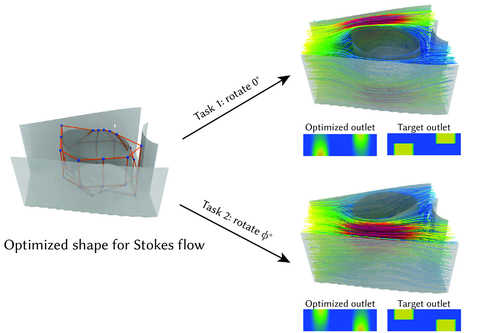

Abstract: We present a method for performance-driven optimization of fluidic devices. In our approach, engineers provide a high-level specification of a device using parametric surfaces for the fluid-solid boundaries. They also specify desired flow properties for the inlets and outlets of the device. Our computational approach optimizes the boundary of the fluidic device such that its steady-state flow matches the desired flow at the outlets. To address the computational challenges of this task, we propose an efficient, differentiable Stokes flow solver. Our solver provides explicit access to gradients of performance metrics with respect to the parametric boundary representation. This key feature allows us to couple the solver with efficient gradient-based optimization methods. We demonstrate the efficacy of this approach on designs of five complex 3D fluidic systems. Our approach takes an important step towards practical computational design tools for high-performance fluidic devices.

Author(s)/Presenter(s):

Tao Du, MIT, United States of America

Kui Wu, MIT, United States of America

Andrew Spielberg, MIT, United States of America

Wojciech Matusik, MIT, United States of America

Bo Zhu, Dartmouth College, United States of America

Eftychios Sifakis, University of Wisconsin–Madison, United States of America
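The fifth abstract couples a differentiable Stokes flow solver with gradient-based optimization of a device's boundary. A full 3D solver is beyond a short example, so this sketch assumes the simplest geometry in which Stokes flow has a closed form: two circular pipes in parallel, governed by the Hagen-Poiseuille law. The viscosity, inflow rate, pipe dimensions, and target split are hypothetical, and the optimizer works on the log of one radius so that the step size does not depend on units.

```python
"""Matching an outlet flow split with a closed-form, differentiable Stokes model.

One inlet feeds two circular pipes in parallel that exit at the same pressure.
Hagen-Poiseuille: Q = G * dP with conductance G = pi * R^4 / (8 * mu * L).
"""
import math
from typing import Tuple

MU = 1.0e-3    # dynamic viscosity in Pa*s (water, assumed)
Q_IN = 1.0e-9  # total inflow in m^3/s (hypothetical)


def conductance(radius: float, length: float, mu: float = MU) -> float:
    """Hagen-Poiseuille hydraulic conductance of a circular pipe."""
    return math.pi * radius ** 4 / (8.0 * mu * length)


def outlet_flows(r1: float, r2: float, l1: float, l2: float) -> Tuple[float, float]:
    """Parallel pipes share one pressure drop, so flow splits in proportion to G."""
    g1, g2 = conductance(r1, l1), conductance(r2, l2)
    return Q_IN * g1 / (g1 + g2), Q_IN * g2 / (g1 + g2)


def fit_radius(target_frac: float, r2: float, l1: float, l2: float,
               lr: float = 0.5, steps: int = 300) -> float:
    """Gradient descent on s = log(r1) for L(s) = (Q1 / Q_IN - target_frac)^2."""
    s = math.log(r2)  # start from equal radii
    for _ in range(steps):
        q1, _ = outlet_flows(math.exp(s), r2, l1, l2)
        f = q1 / Q_IN
        # G1 scales as r1^4 = exp(4 s), hence df/ds = 4 f (1 - f).
        grad = 2.0 * (f - target_frac) * 4.0 * f * (1.0 - f)
        s -= lr * grad
    return math.exp(s)


if __name__ == "__main__":
    r2, length = 1.0e-3, 0.01  # metres
    r1 = fit_radius(target_frac=0.8, r2=r2, l1=length, l2=length)
    q1, q2 = outlet_flows(r1, r2, length, length)
    print(f"r1 = {r1 * 1e3:.4f} mm, outlet split = {q1 / Q_IN:.3f} / {q2 / Q_IN:.3f}")
```

With equal pipe lengths the optimum is known in closed form: an 80/20 split requires (r1/r2)^4 = 0.8/0.2 = 4, so r1 = sqrt(2) * r2, about 1.4142 mm here, which the gradient descent reproduces.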