Pre-recorded Sessions: From 4 December 2020 | Live Sessions: 10 – 13 December 2020

4 – 13 December 2020

Pre-recorded Sessions: From 4 December 2020 | Live Sessions: 10 – 13 December 2020

4 – 13 December 2020

#SIGGRAPHAsia | #SIGGRAPHAsia2020

#SIGGRAPHAsia | #SIGGRAPHAsia2020

Date: Sunday, December 13th

Time: 9:00am - 9:30am

Venue: Zoom Room 2

Note: All live sessions will be screened on Singapore Time/GMT+8. Convert your time zone here.

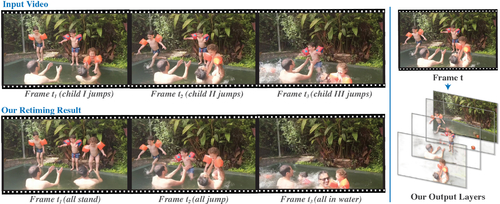

Abstract: We present a method for retiming people in an ordinary, natural video --- manipulating and editing the time in which different motions of individuals in the video occur. We can temporally align different motions, change the speed of certain actions (speeding up/slowing down, or entirely "freezing" people), or "erase" selected people from the video altogether. We achieve these effects computationally via a dedicated learning-based layered video representation, where each frame in the video is decomposed into separate RGBA layers, representing the appearance of different people in the video. A key property of our model is that it not only disentangles the direct motions of each person in the input video, but also correlates each person automatically with the scene changes they generate---e.g., shadows, reflections, and motion of loose clothing. The layers can be individually retimed and recombined into a new video, allowing us to achieve realistic, high-quality renderings of retiming effects for real-world videos depicting complex actions and involving multiple individuals, including dancing, trampoline jumping, or group running.

Author(s)/Presenter(s):

Erika Lu, University of Oxford, United States of America

Forrester Cole, Google Research, United States of America

Tali Dekel, Google Research, United States of America

Weidi Xie, University of Oxford, United Kingdom

Andrew Zisserman, University of Oxford, United Kingdom

David Salesin, Google Research, United States of America

William T. Freeman, Google Research, United States of America

Michael Rubinstein, Google Research, United States of America

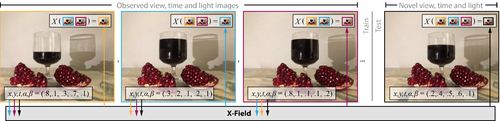

Abstract: We suggest to represent an X-Fields, a set of 2D images taken across different view, time or illumination conditions i.e. video, light field, reflectance fields or combinations thereof by learning a neural network (NN) to map their view, time or light coordinates to 2D images. Executing this NN at new coordinates results in joint view, time or light interpolation. The key idea to make this workable is a NN that already knows the "basic tricks" of graphics (lighting, 3D projection, occlusion) in a hard-coded and differentiable form. The NN represents the implicit input to that rendering as an implicit map, that for any view, time, light coordinate and for any pixel can quantify how it will move if view, time or light coordinates change (Jacobian of pixel position in respect to view, time, illumination, etc). An X-Fields is trained for one scene within minutes, leading to a compact set of trainable parameters and consequently real-time navigation in view, time and illumination.

Author(s)/Presenter(s):

Mojtaba Bemana, MPI Informatik, Germany

Karol Myszkowski, MPI Informatik, Germany

Hans-Peter Seidel, MPI Informatik, Germany

Tobias Ritschel, University College London, United Kingdom

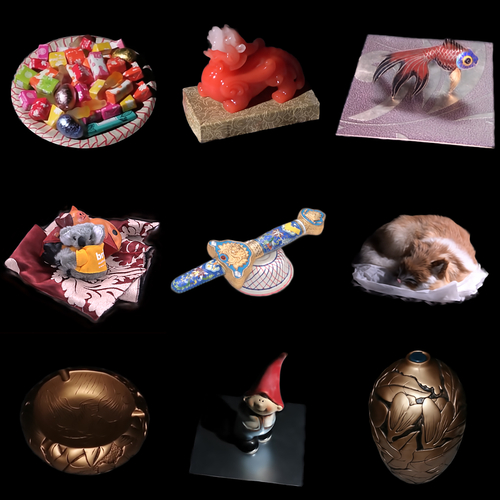

Abstract: We present deferred neural lighting, a novel method for free-viewpoint relighting from unstructured photographs of a scene captured with handheld devices. Our method leverages a scene-dependent neural rendering network for relighting a rough geometric proxy with learnable neural textures. Key to making the rendering network lighting aware are radiance cues: global illumination renderings of a rough proxy geometry of the scene for a small set of basis materials and lit by the target lighting. As such, the light transport through the scene is never explicitely modeled, but resolved at rendering time by a neural rendering network. We demonstrate that the neural textures and neural renderer can be trained end-to-end from unstructured photographs captured with a double hand-held camera setup that concurrently captures the scene while being lit by only one of the cameras' flash lights. In addition, we propose a novel augmentation refinement strategy that exploits the linearity of light transport to extend the relighting capabilities of the neural rendering network to support other lighting types (e.g., environment lighting) beyond the lighting used during acquisition (i.e., flash lighting). We demonstrate our deferred neural lighting solution on a variety of real-world and synthetic scenes exhibiting a wide range of material properties, light transport effects, and geometrical complexity.

Author(s)/Presenter(s):

Duan Gao, BNRist, Tsinghua University, China

Guojun Chen, Microsoft Research Asia, China

Yue Dong, Microsoft Research Asia, China

Pieter Peers, College of William & Mary, United States of America

Kun Xu, BNRist, Tsinghua University, China

Xin Tong, Microsoft Research Asia, China

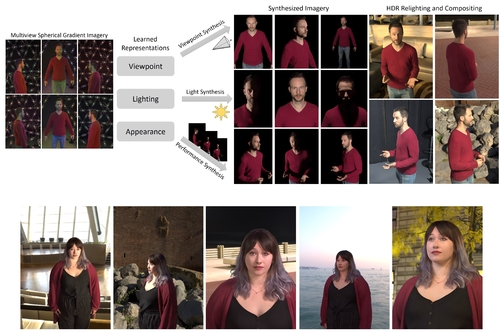

Abstract: The increasing demand for 3D content in augmented and virtual reality has motivated the development of volumetric performance capture systems such as the Light Stage. Recent advances are pushing free viewpoint relightable videos of dynamic human performances closer to photorealistic quality. However, despite significant efforts, these sophisticated systems are limited by reconstruction and rendering algorithms which do not fully model complex 3D structures and higher order light transport effects such as global illumination and sub-surface scattering. In this paper, we propose a system that combines traditional geometric pipelines with a neural rendering scheme to generate photorealistic renderings of dynamic performances under desired viewpoint and lighting. Our system leverages deep neural networks that model the classical rendering process to learn implicit features that represent the view-dependent appearance of the subject independent of the geometry layout, allowing for generalization to unseen subject poses and even novel subject identity. Detailed experiments and comparisons demonstrate the efficacy and versatility of our method to generate high-quality results, significantly outperforming the existing state-of-the-art solutions.

Author(s)/Presenter(s):

Philip Davidson, Google Inc., United States of America

Daniel Erickson, Google Inc., United States of America

Yinda Zhang, Google Inc., United States of America

Jonathan Taylor, Google Inc., United States of America

Sofien Bouaziz, Google Inc., United States of America

Chloe Legendre, Google Inc., United States of America

Wan-Chun Ma, Google Inc., United States of America

Ryan Overbeck, Google Inc., United States of America

Thabo Beeler, Google Inc., United States of America

Paul Debevec, Google Inc., United States of America

Shahram Izadi, Google Inc., United States of America

Christian Theobalt, Max-Planck-Institut für Informatik, Germany

Christoph Rhemann, Google Inc., United States of America

Sean Fanello, Google Inc., United States of America

Abhimitra Meka, Google Inc., Max-Planck-Institut für Informatik, United States of America

Rohit Pandey, Google Inc., United States of America

Christian Haene, Google Inc., United States of America

Sergio Orts-Escolano, Google Inc., United States of America

Peter Barnum, Google Inc., United States of America

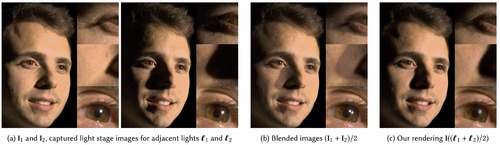

Abstract: The light stage has been widely used in computer graphics for the past two decades, primarily to enable the relighting of human faces. By capturing the appearance of the human subject under different light sources, one obtains the light transport matrix of that subject, which enables image-based relighting in novel environments. However, due to the finite number of lights in the stage, the light transport matrix only represents a sparse sampling on the entire sphere. As a consequence, relighting the subject with a point light or a directional source that does not coincide exactly with one of the lights in the stage requires interpolation and resampling the images corresponding to nearby lights, and this leads to ghosting shadows, aliased specularities, and other artifacts. To ameliorate these artifacts and produce better results under arbitrary high-frequency lighting, this paper proposes a learning-based solution for the ``super-resolution'' of scans of human faces taken from a light stage.Given an arbitrary ``query'' light direction, our method aggregates the captured images corresponding to neighboring lights in the stage, and uses a neural network to synthesize a rendering of the face that appears to be illuminated by a ``virtual'' light source at the query location.This neural network must circumvent the inherent aliasing and regularity of the light stage data that was used for training, which we accomplish through the use of regularized traditional interpolation methods within our network.Our learned model is able to produce renderings for arbitrary light directions that exhibit realistic shadows and specular highlights, and is able to generalize across a wide variety of subjects. Our super-resolution approach enables more accurate renderings of human subjects under detailed environment maps, or the construction of simpler light stages that contain fewer light sources while still yielding comparable quality renderings as light stages with more densely sampled lights.

Author(s)/Presenter(s):

Tiancheng Sun, University of California San Diego, United States of America

Zexiang Xu, University of California San Diego, United States of America

Xiuming Zhang, Massachusetts Institute of Technology, United States of America

Sean Fanello, Google Inc., United States of America

Christoph Rhemann, Google Inc., United States of America

Paul Debevec, Google Inc., United States of America

Yun-Ta Tsai, Google Research, United States of America

Jonathan T. Barron, Google Research, United States of America

Ravi Ramamoorthi, University of California San Diego, United States of America