Pre-recorded Sessions: From 4 December 2020 | Live Sessions: 10 – 13 December 2020

4 – 13 December 2020

#SIGGRAPHAsia | #SIGGRAPHAsia2020

Date: Thursday, December 10th

Time: 1:00pm - 1:30pm

Venue: Zoom Room 4

Note: All live sessions will be screened on Singapore Time (GMT+8).

Abstract: Holographic optical elements (HOEs) have a wide range of applications, including their emerging use in virtual and augmented reality displays, but their design and fabrication have remained largely limited to configurations using simple wavefronts. In this paper, we present a pipeline for the design, optimization, and fabrication of complex, customized HOEs that enhances their imaging performance and enables new applications. In particular, we propose an optimization method for grating vector fields that accounts for the unique selectivity properties of HOEs. We further show how this pipeline can be applied to two distinct HOE fabrication methods. The first uses a pair of freeform refractive elements to manufacture HOEs with high optical quality and precision. The second uses a holographic printer with two wavefront-modulating arms, enabling rapid prototyping. We propose a unified wavefront decomposition framework suitable for both fabrication approaches. To demonstrate the versatility of these methods, we fabricate and characterize a series of specialized HOEs, including an aspheric lens, a head-up display lens, a lens array, and, for the first time, a full-color caustic projection element.

Author(s)/Presenter(s):

Changwon Jang, Facebook Reality Labs, United States of America

Olivier Mercier, Facebook Reality Labs, United States of America

Kiseung Bang, Facebook Reality Labs, United States of America

Gang Li, Facebook Reality Labs, United States of America

Yang Zhao, Facebook Reality Labs, United States of America

Douglas Lanman, Facebook Reality Labs, United States of America

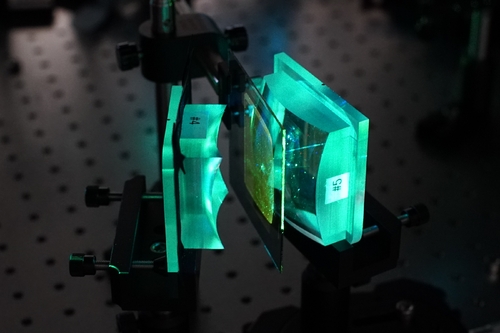

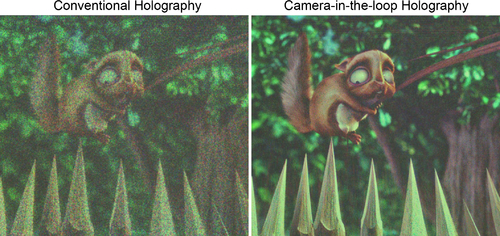

Abstract: Holographic displays promise unprecedented capabilities for direct-view displays as well as virtual and augmented reality applications. However, one of the biggest challenges for computer-generated holography (CGH) is the fundamental tradeoff between algorithm runtime and achieved image quality, which has prevented high-quality holographic image synthesis at fast speeds. Moreover, the image quality achieved by most holographic displays is low, due to the mismatch between the optical wave propagation of the display and its simulated model. Here, we develop an algorithmic CGH framework that achieves unprecedented image fidelity and real-time framerates. Our framework comprises several parts, including a novel camera-in-the-loop optimization strategy that allows us to either optimize a hologram directly or train an interpretable model of the optical wave propagation and a neural network architecture that represents the first CGH algorithm capable of generating full-color high-quality holographic images at 1080p resolution in real time.
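As background for the hologram optimization problem this abstract refers to, the following is a minimal sketch of the classic Gerchberg-Saxton iteration for a phase-only hologram. It is not the authors' camera-in-the-loop method or their neural network: it assumes an idealized far-field (Fourier transform) propagation model, which is exactly the kind of simulated model the abstract says can mismatch a real display. The function name, the test target, and the iteration count are illustrative assumptions.

```python
import numpy as np


def gerchberg_saxton(target_amp, iterations=50, seed=0):
    """Classic Gerchberg-Saxton phase retrieval (illustrative baseline only).

    Assumes an ideal Fourier-transform propagation between the phase-only
    modulator plane and the image plane. Returns the final phase pattern and
    the per-iteration mean squared amplitude error in the image plane.
    """
    rng = np.random.default_rng(seed)
    phase = rng.uniform(0.0, 2.0 * np.pi, size=target_amp.shape)
    errors = []
    for _ in range(iterations):
        # Modulator plane: unit amplitude, current phase estimate.
        slm_field = np.exp(1j * phase)
        # Propagate to the image plane (assumed ideal far-field model).
        image_field = np.fft.fft2(slm_field, norm="ortho")
        errors.append(float(np.mean((np.abs(image_field) - target_amp) ** 2)))
        # Image-plane constraint: keep the phase, impose the target amplitude.
        constrained = target_amp * np.exp(1j * np.angle(image_field))
        # Propagate back and keep only the phase (phase-only constraint).
        phase = np.angle(np.fft.ifft2(constrained, norm="ortho"))
    return phase, errors


# Hypothetical target: a bright square, scaled so its energy matches the
# unit-amplitude modulator field under the orthonormal FFT.
target = np.zeros((32, 32))
target[8:24, 8:24] = 1.0
target *= np.sqrt(target.size / np.sum(target ** 2))

final_phase, errors = gerchberg_saxton(target)
```

The error list makes the runtime versus quality tradeoff visible: each extra iteration costs two FFTs and lowers (or at worst holds) the amplitude error under the assumed model, but says nothing about how a physical display would reproduce the result.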

Author(s)/Presenter(s):

Yifan Peng, Stanford University, United States of America

Suyeon Choi, Stanford University, United States of America

Nitish Padmanaban, Stanford University, United States of America

Gordon Wetzstein, Stanford University, United States of America

Abstract: Holography is arguably the most promising technology to provide wide field-of-view compact eyeglasses-style near-eye displays for augmented and virtual reality. However, the image quality of existing holographic displays is far from that of current-generation conventional displays, effectively making today's holographic display systems impractical. This gap stems predominantly from the severe deviations in the idealized approximations of the "unknown" light transport model in a real holographic display, used for computing holograms. In this work, we depart from such approximate "ideal" coherent light transport models for computing holograms. Instead, we learn the deviations of the real display from the ideal light transport from the images measured using a display-camera hardware system. After this unknown light propagation is learned, we use it to compensate for severe aberrations in real holographic imagery. The proposed hardware-in-the-loop approach is robust to spatial, temporal and hardware deviations, and improves the image quality of existing methods qualitatively and quantitatively in SNR and perceptual quality. We validate our approach on a holographic display prototype and show that the method can fully compensate for unknown aberrations and erroneous and non-linear SLM phase delays, without explicitly modeling them. As a result, the proposed method significantly outperforms existing state-of-the-art methods in simulation and experimentation, just by observing captured holographic images.

Author(s)/Presenter(s):

Praneeth Chakravarthula, University of North Carolina at Chapel Hill (UNC), United States of America

Ethan Tseng, Princeton University, United States of America

Tarun Srivastava, Princeton University, United States of America

Henry Fuchs, University of North Carolina at Chapel Hill (UNC), United States of America

Felix Heide, Princeton University, United States of America

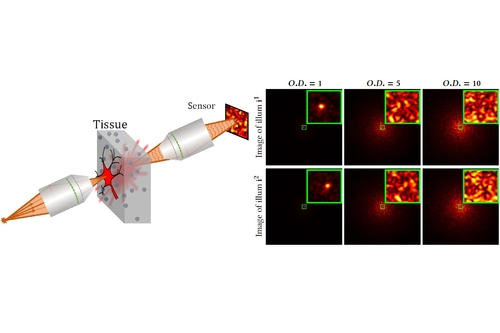

Abstract: We introduce rendering algorithms for the simulation of speckle statistics observed in scattering media under coherent near-field imaging conditions. Our work is motivated by the recent proliferation of techniques that use speckle correlations for tissue imaging applications: the ability to simulate the image measurements used by these speckle imaging techniques in a physically accurate and computationally efficient way can facilitate the widespread adoption and improvement of these techniques. To this end, we draw inspiration from recently introduced Monte Carlo algorithms for rendering speckle statistics under far-field conditions (collimated sensor and illumination). We derive variants of these algorithms that are better suited to the near-field conditions (focused sensor and illumination) required by tissue imaging applications. Our approach is based on using Gaussian apodization to approximate the sensor and illumination aperture, as well as von Mises-Fisher functions to approximate the phase function of the scattering material. We show that these approximations allow us to derive closed-form expressions for the focusing operations involved in simulating near-field speckle patterns. As we demonstrate in our experiments, these approximations accelerate speckle rendering simulations by a few orders of magnitude compared to previous techniques, at the cost of negligible bias. We validate the accuracy of our algorithms by reproducing ground-truth speckle statistics simulated using wave-optics solvers, and real-material measurements available in the literature. Finally, we use our algorithms to simulate biomedical imaging techniques for focusing through tissue.
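To make the von Mises-Fisher approximation mentioned in this abstract concrete, here is a minimal sketch of the standard von Mises-Fisher density on the unit sphere, used as a phase function of the cosine of the scattering angle. It shows only the textbook distribution and a numerical normalization check; it is not the authors' rendering algorithm, and the function names and the concentration value `kappa = 5.0` are assumptions chosen for illustration.

```python
import math


def vmf_phase_function(cos_theta, kappa):
    """Von Mises-Fisher density on the unit sphere, per unit solid angle.

    cos_theta is the cosine of the angle to the forward direction and kappa
    is the concentration: kappa = 0 is isotropic, larger kappa is more
    forward-peaked. Written in a numerically stable form equivalent to
    kappa / (4 * pi * sinh(kappa)) * exp(kappa * cos_theta).
    """
    if kappa < 1e-8:
        return 1.0 / (4.0 * math.pi)  # isotropic limit
    norm = kappa / (2.0 * math.pi * -math.expm1(-2.0 * kappa))
    return norm * math.exp(kappa * (cos_theta - 1.0))


def integrate_over_sphere(kappa, steps=20000):
    """Midpoint-rule integral of the density over all directions."""
    d_mu = 2.0 / steps
    total = 0.0
    for i in range(steps):
        mu = -1.0 + (i + 0.5) * d_mu  # mu = cos(theta)
        total += vmf_phase_function(mu, kappa)
    # The density does not depend on azimuth, which contributes 2 * pi.
    return 2.0 * math.pi * total * d_mu


kappa = 5.0  # hypothetical concentration, not a value from the paper
normalization = integrate_over_sphere(kappa)
```

Because the density is an exponential of a linear function of direction, products and integrals with Gaussian-like terms often have closed forms, which is consistent with the abstract's remark about closed-form focusing expressions.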

Author(s)/Presenter(s):

Chen Bar, Technion – Israel Institute of Technology, Israel

Ioannis Gkioulekas, Carnegie Mellon University, United States of America

Anat Levin, Technion – Israel Institute of Technology, Israel