Pre-recorded Sessions: From 4 December 2020 | Live Sessions: 10 – 13 December 2020

4 – 13 December 2020

Pre-recorded Sessions: From 4 December 2020 | Live Sessions: 10 – 13 December 2020

4 – 13 December 2020

#SIGGRAPHAsia | #SIGGRAPHAsia2020

#SIGGRAPHAsia | #SIGGRAPHAsia2020

Date/Time:

04 – 13 December 2020

All presentations are available in the virtual platform on-demand.

Lecturer(s):

Sanghun Park, KAIST, Visual Media Lab, South Korea

Kwanggyoon Seo, KAIST, Visual Media Lab, South Korea

Junyong Noh, KAIST, Visual Media Lab, South Korea

Bio:

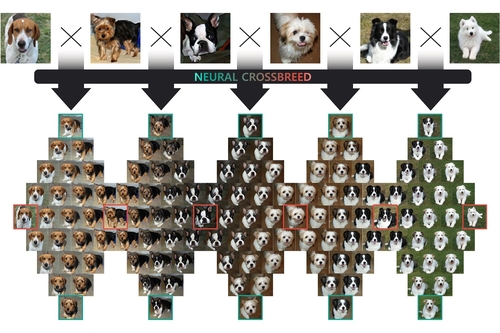

Description: We propose Neural Crossbreed, a feed-forward neural network that can learn a semantic change of input images in a latent space to create the morphing effect. Because the network learns a semantic change, a sequence of meaningful intermediate images can be generated without requiring the user to specify explicit correspondences. In addition, the semantic change learning makes it possible to perform the morphing between the images that contain objects with significantly different poses or camera views. Furthermore, just as in conventional morphing techniques, our morphing network can handle shape and appearance transitions separately by disentangling the content and the style transfer for rich usability. We prepare a training dataset for morphing using a pre-trained BigGAN, which generates an intermediate image by interpolating two latent vectors at an intended morphing value. This is the first attempt to address image morphing using a pre-trained generative model in order to learn semantic transformation. The experiments show that Neural Crossbreed produces high quality morphed images, overcoming various limitations associated with conventional approaches. In addition, Neural Crossbreed can be further extended for diverse applications such as multi-image morphing, appearance transfer, and video frame interpolation.