Pre-recorded Sessions: From 4 December 2020 | Live Sessions: 10 – 13 December 2020

4 – 13 December 2020

#SIGGRAPHAsia | #SIGGRAPHAsia2020

Date/Time:

04 – 13 December 2020

All presentations are available in the virtual platform on-demand.

Lecturer(s):

Erika Lu, University of Oxford, United Kingdom

Forrester Cole, Google Research, United States of America

Tali Dekel, Google Research, United States of America

Weidi Xie, University of Oxford, United Kingdom

Andrew Zisserman, University of Oxford, United Kingdom

David Salesin, Google Research, United States of America

William T. Freeman, Google Research, United States of America

Michael Rubinstein, Google Research, United States of America

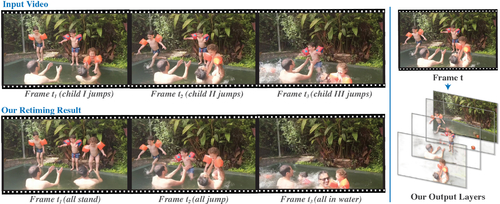

Description: We present a method for retiming people in an ordinary, natural video: manipulating and editing the times at which the different motions of individuals in the video occur. We can temporally align different motions, change the speed of certain actions (speeding up, slowing down, or entirely "freezing" people), or "erase" selected people from the video altogether. We achieve these effects computationally via a dedicated learning-based layered video representation, in which each frame of the video is decomposed into separate RGBA layers representing the appearance of different people in the video. A key property of our model is that it not only disentangles the direct motions of each person in the input video, but also automatically correlates each person with the scene changes they generate, e.g., shadows, reflections, and motion of loose clothing. The layers can be individually retimed and recombined into a new video, allowing us to achieve realistic, high-quality renderings of retiming effects for real-world videos that depict complex actions and involve multiple individuals, including dancing, trampoline jumping, and group running.
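To make the "retime and recombine" step concrete, the following is a minimal sketch of how already-decomposed RGBA layers might be retimed and composited back into a frame. It is not the presenters' implementation: the description does not say how layers are recombined, so standard back-to-front "over" compositing with non-premultiplied alpha is assumed here, and the names `over`, `retime_index`, and `render_frame`, as well as the `(layer, offset, speed)` retiming parameters, are hypothetical. The learned decomposition itself is out of scope; layers are taken as given.

```python
from typing import List, Sequence, Tuple

Pixel = Tuple[float, float, float, float]  # (r, g, b, a), each in [0, 1]
Frame = List[Pixel]                        # flattened image
Layer = List[Frame]                        # one RGBA frame per time step


def over(front: Pixel, back: Pixel) -> Pixel:
    """Assumed 'over' operator for non-premultiplied RGBA pixels."""
    fa, ba = front[3], back[3]
    out_a = fa + ba * (1.0 - fa)
    if out_a == 0.0:
        return (0.0, 0.0, 0.0, 0.0)
    r, g, b = (
        (front[c] * fa + back[c] * ba * (1.0 - fa)) / out_a for c in range(3)
    )
    return (r, g, b, out_a)


def retime_index(t: int, offset: int, speed: float, num_frames: int) -> int:
    """Map output time t to a source frame index, clamped to the clip.

    speed=1.0 plays normally, speed=0.0 freezes the layer at `offset`,
    and a non-zero offset shifts the layer in time (e.g. to align motions).
    """
    src = int(t * speed) + offset
    return max(0, min(num_frames - 1, src))


def render_frame(
    background: Layer,
    people: Sequence[Tuple[Layer, int, float]],
    t: int,
) -> Frame:
    """Composite retimed person layers over the background at output time t.

    `people` is ordered back to front; leaving a layer out "erases" that
    person together with whatever the layer carries (e.g. their shadow).
    """
    out = list(background[retime_index(t, 0, 1.0, len(background))])
    for layer, offset, speed in people:
        src = layer[retime_index(t, offset, speed, len(layer))]
        out = [over(p, q) for p, q in zip(src, out)]
    return out
```

Under these assumptions, freezing a person corresponds to passing `speed=0.0`, temporal alignment to choosing per-layer offsets, and erasing to omitting the layer from `people`. Because each layer is described as also carrying the scene changes its person generates, those effects would move with the layer when it is retimed.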