Pre-recorded Sessions: From 4 December 2020 | Live Sessions: 10 – 13 December 2020

4 – 13 December 2020

Pre-recorded Sessions: From 4 December 2020 | Live Sessions: 10 – 13 December 2020

4 – 13 December 2020

#SIGGRAPHAsia | #SIGGRAPHAsia2020

#SIGGRAPHAsia | #SIGGRAPHAsia2020

Date/Time:

04 – 13 December 2020

All presentations are available in the virtual platform on-demand.

Lecturer(s):

Philip Davidson, Google Inc., United States of America

Daniel Erickson, Google Inc., United States of America

Yinda Zhang, Google Inc., United States of America

Jonathan Taylor, Google Inc., United States of America

Sofien Bouaziz, Google Inc., United States of America

Chloe Legendre, Google Inc., United States of America

Wan-Chun Ma, Google Inc., United States of America

Ryan Overbeck, Google Inc., United States of America

Thabo Beeler, Google Inc., United States of America

Paul Debevec, Google Inc., United States of America

Shahram Izadi, Google Inc., United States of America

Christian Theobalt, Max-Planck-Institut für Informatik, Germany

Christoph Rhemann, Google Inc., United States of America

Sean Fanello, Google Inc., United States of America

Abhimitra Meka, Google Inc., Max-Planck-Institut für Informatik, United States of America

Rohit Pandey, Google Inc., United States of America

Christian Haene, Google Inc., United States of America

Sergio Orts-Escolano, Google Inc., United States of America

Peter Barnum, Google Inc., United States of America

Bio:

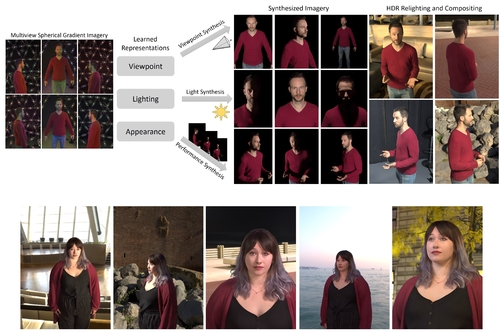

Description: The increasing demand for 3D content in augmented and virtual reality has motivated the development of volumetric performance capture systems such as the Light Stage. Recent advances are pushing free viewpoint relightable videos of dynamic human performances closer to photorealistic quality. However, despite significant efforts, these sophisticated systems are limited by reconstruction and rendering algorithms which do not fully model complex 3D structures and higher order light transport effects such as global illumination and sub-surface scattering. In this paper, we propose a system that combines traditional geometric pipelines with a neural rendering scheme to generate photorealistic renderings of dynamic performances under desired viewpoint and lighting. Our system leverages deep neural networks that model the classical rendering process to learn implicit features that represent the view-dependent appearance of the subject independent of the geometry layout, allowing for generalization to unseen subject poses and even novel subject identity. Detailed experiments and comparisons demonstrate the efficacy and versatility of our method to generate high-quality results, significantly outperforming the existing state-of-the-art solutions.