Pre-recorded Sessions: From 4 December 2020 | Live Sessions: 10 – 13 December 2020

4 – 13 December 2020

Pre-recorded Sessions: From 4 December 2020 | Live Sessions: 10 – 13 December 2020

4 – 13 December 2020

#SIGGRAPHAsia | #SIGGRAPHAsia2020

#SIGGRAPHAsia | #SIGGRAPHAsia2020

Date/Time:

04 – 13 December 2020

All presentations are available in the virtual platform on-demand.

Lecturer(s):

Tiancheng Sun, University of California San Diego, United States of America

Zexiang Xu, University of California San Diego, United States of America

Xiuming Zhang, Massachusetts Institute of Technology, United States of America

Sean Fanello, Google Inc., United States of America

Christoph Rhemann, Google Inc., United States of America

Paul Debevec, Google Inc., United States of America

Yun-Ta Tsai, Google Research, United States of America

Jonathan T. Barron, Google Research, United States of America

Ravi Ramamoorthi, University of California San Diego, United States of America

Bio:

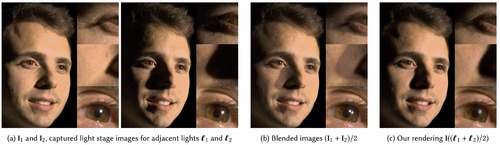

Description: The light stage has been widely used in computer graphics for the past two decades, primarily to enable the relighting of human faces. By capturing the appearance of the human subject under different light sources, one obtains the light transport matrix of that subject, which enables image-based relighting in novel environments. However, due to the finite number of lights in the stage, the light transport matrix only represents a sparse sampling on the entire sphere. As a consequence, relighting the subject with a point light or a directional source that does not coincide exactly with one of the lights in the stage requires interpolation and resampling the images corresponding to nearby lights, and this leads to ghosting shadows, aliased specularities, and other artifacts. To ameliorate these artifacts and produce better results under arbitrary high-frequency lighting, this paper proposes a learning-based solution for the ``super-resolution'' of scans of human faces taken from a light stage.Given an arbitrary ``query'' light direction, our method aggregates the captured images corresponding to neighboring lights in the stage, and uses a neural network to synthesize a rendering of the face that appears to be illuminated by a ``virtual'' light source at the query location.This neural network must circumvent the inherent aliasing and regularity of the light stage data that was used for training, which we accomplish through the use of regularized traditional interpolation methods within our network.Our learned model is able to produce renderings for arbitrary light directions that exhibit realistic shadows and specular highlights, and is able to generalize across a wide variety of subjects. Our super-resolution approach enables more accurate renderings of human subjects under detailed environment maps, or the construction of simpler light stages that contain fewer light sources while still yielding comparable quality renderings as light stages with more densely sampled lights.